Commits on Source (25)

David Schäfer authored ffac6189


David Schäfer authored

Add option to not overwrite existing flags to concatFlags See merge request !578

9c94bbd5

David Schäfer authored d698725d


c3e4ec42


David Schäfer authored

harden and improve History See merge request !590

59f8b387
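The History reworked in !590 presumably lives in saqc/core/history.py, which has the largest change count in the file list. To illustrate the general idea only, the sketch below assumes a column-per-call design: each flagging call appends one column, NaN marks rows that a call left untouched, and the effective flag of a row is its last non-NaN entry (or an UNFLAGGED sentinel, assumed here to be -inf). The class `FlagHistory`, its methods, and the length check standing in for "hardening" are hypothetical and are not saqc's API.

```python
import math
from typing import List

# Assumption: -inf is the "no flag" sentinel, mirroring a float flag convention.
UNFLAGGED = -math.inf


class FlagHistory:
    """Hypothetical column-per-call flag history (not saqc's History class)."""

    def __init__(self, length: int) -> None:
        self.length = length
        self.columns: List[List[float]] = []

    def append(self, column: List[float]) -> None:
        # Defensive check: every column must cover exactly the same rows.
        if len(column) != self.length:
            raise ValueError(
                f"column has {len(column)} rows, history expects {self.length}"
            )
        self.columns.append([float(v) for v in column])

    def effective_flags(self) -> List[float]:
        # Later columns win; NaN means "this call did not touch the row".
        result = [UNFLAGGED] * self.length
        for column in self.columns:
            for i, value in enumerate(column):
                if not math.isnan(value):
                    result[i] = value
        return result


nan = math.nan
hist = FlagHistory(3)
hist.append([255.0, nan, nan])  # first call sets 255.0 on row 0
hist.append([nan, 25.0, nan])   # second call sets 25.0 on row 1
print(hist.effective_flags())   # [255.0, 25.0, -inf]
```

Keeping every call as its own column (instead of overwriting a single flag column) is what makes it possible to ask afterwards which call produced a given flag.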

David Schäfer authored e0f35eb3


David Schäfer authored

Translation cleanups See merge request !579

788cd7fb
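The translation cleanups in !579 presumably correspond to the changes under saqc/core/translation/ (basescheme.py, dmpscheme.py, positionalscheme.py). A translation scheme maps user-facing flags to the internal float flags and back. The sketch below shows that round trip for a simple one-to-one mapping. The class `MappingTranslation`, its method names, and the concrete internal values (-inf, 0.0, 255.0) are assumptions for illustration, not saqc's translation API.

```python
import math
from typing import Dict, List

UNFLAGGED = -math.inf  # assumed internal "no flag" value
GOOD = 0.0             # assumed internal value for an OK flag
BAD = 255.0            # assumed internal value for a BAD flag


class MappingTranslation:
    """Hypothetical two-way flag translation; not saqc's translation API."""

    def __init__(self, forward: Dict[str, float]) -> None:
        self.forward = dict(forward)
        # Derive the reverse mapping so both directions stay consistent.
        self.backward = {value: key for key, value in self.forward.items()}
        if len(self.backward) != len(self.forward):
            raise ValueError("forward mapping must be one-to-one")

    def to_internal(self, flags: List[str]) -> List[float]:
        try:
            return [self.forward[flag] for flag in flags]
        except KeyError as err:
            raise ValueError(f"unknown external flag: {err.args[0]!r}") from None

    def to_external(self, flags: List[float]) -> List[str]:
        try:
            return [self.backward[flag] for flag in flags]
        except KeyError as err:
            raise ValueError(f"unknown internal flag: {err.args[0]!r}") from None


scheme = MappingTranslation({"UNFLAGGED": UNFLAGGED, "OK": GOOD, "BAD": BAD})
internal = scheme.to_internal(["OK", "BAD", "UNFLAGGED"])
print(internal)                      # [0.0, 255.0, -inf]
print(scheme.to_external(internal))  # ['OK', 'BAD', 'UNFLAGGED']
```

Deriving the backward table from the forward one keeps the two directions from drifting apart when a scheme is edited.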

David Schäfer authored b7e72f87


David Schäfer authored

Add option to pass existing axis object to the plot function See merge request !597

5e10c7ff
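Merge request !597 lets the plot function accept an existing axis object. The usual matplotlib pattern behind such an option is sketched below: take an optional `Axes`, create one only when none is given, draw onto it, and return it, so callers can compose several plots in one figure they control. The helper `plot_series` and its `ax` parameter are hypothetical and do not reproduce saqc's plot signature.

```python
import matplotlib

matplotlib.use("Agg")  # non-interactive backend so the sketch runs headless
import matplotlib.pyplot as plt
from matplotlib.axes import Axes
from typing import Optional, Sequence


def plot_series(values: Sequence[float], ax: Optional[Axes] = None) -> Axes:
    """Hypothetical helper: draw on a caller-supplied Axes or create a new one."""
    if ax is None:
        # No axis passed in: create a fresh figure with a single Axes.
        _, ax = plt.subplots()
    ax.plot(range(len(values)), values, marker="o")
    return ax


# Usage: two plots side by side in one figure owned by the caller.
fig, (left, right) = plt.subplots(1, 2, figsize=(8, 3))
plot_series([1.0, 3.0, 2.0], ax=left)
plot_series([2.0, 2.5, 4.0], ax=right)
```

Returning the `Axes` in both branches lets callers keep customizing (titles, limits) regardless of who created it.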

David Schäfer authored d710cb91


David Schäfer authored

Change the default limit value of the interpolation routines See merge request !598

86fa51ae
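Merge request !598 changes the default `limit` of the interpolation routines (see also !600); the old and new default values are not listed on this page. As an illustration of what such a gap limit typically controls, the sketch below linearly interpolates runs of NaN but, as an assumption, leaves any run longer than `limit` completely unfilled, with `limit=None` meaning no restriction. This all-or-nothing reading differs from pandas' `Series.interpolate(limit=...)`, which fills up to `limit` values of a longer gap. `interpolate_gaps` is a hypothetical helper, not saqc's API.

```python
import math
from typing import List, Optional


def interpolate_gaps(values: List[float], limit: Optional[int] = None) -> List[float]:
    """Hypothetical sketch: linearly fill NaN gaps of at most `limit` values."""
    out = list(values)
    n = len(out)
    i = 0
    while i < n:
        if not math.isnan(out[i]):
            i += 1
            continue
        start = i
        while i < n and math.isnan(out[i]):
            i += 1
        gap = i - start  # length of this NaN run; out[i] is the right anchor
        if start == 0 or i == n:
            continue  # leading/trailing gap: no value on one side to interpolate from
        if limit is not None and gap > limit:
            continue  # assumed rule: gaps longer than `limit` stay completely unfilled
        left, right = out[start - 1], out[i]
        for k in range(start, i):
            step = (k - start + 1) / (gap + 1)
            out[k] = left + step * (right - left)
    return out


nan = math.nan
series = [0.0, nan, 2.0, nan, nan, nan, 6.0]
print(interpolate_gaps(series))           # [0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
print(interpolate_gaps(series, limit=2))  # [0.0, 1.0, 2.0, nan, nan, nan, 6.0]
```

Under this reading, the default `limit` decides whether long outages are bridged by a straight line or left visible as missing data.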

David Schäfer authored 8b1866df


David Schäfer authored

Python version support See merge request !601

91f46948

David Schäfer authored 8fa7b7b3


David Schäfer authored

Version bumps See merge request !602

a0723e77

David Schäfer authored 67606463

David Schäfer authored 58553d08


David Schäfer authored

fix plotting test See merge request !603

3ab57adc

David Schäfer authored

changed value of the default parameter overwrite for concatFlags to False See merge request !604

0fa7170c
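Merge request !578 added an option to concatFlags for not overwriting existing flags, and !604 made `overwrite=False` the default. A minimal sketch of that rule on plain lists, assuming float flags with -inf as "unflagged": a source flag is copied only where the target has no flag yet, unless overwriting is requested. `concat_flags` is a hypothetical stand-in and does not reproduce saqc's concatFlags signature.

```python
import math
from typing import List

UNFLAGGED = -math.inf  # assumed "no flag" sentinel


def concat_flags(
    source: List[float], target: List[float], overwrite: bool = False
) -> List[float]:
    """Hypothetical sketch: transfer flags from `source` onto an aligned `target`."""
    if len(source) != len(target):
        raise ValueError("source and target must have the same length")
    result = []
    for src, tgt in zip(source, target):
        if src == UNFLAGGED:
            result.append(tgt)  # nothing to transfer at this position
        elif overwrite or tgt == UNFLAGGED:
            result.append(src)  # copy the source flag
        else:
            result.append(tgt)  # keep the flag the target already has
    return result


source = [255.0, UNFLAGGED, 255.0]
target = [UNFLAGGED, 25.0, 25.0]
print(concat_flags(source, target))                  # [255.0, 25.0, 25.0]
print(concat_flags(source, target, overwrite=True))  # [255.0, 25.0, 255.0]
```

With `overwrite=False` as the default, flags set earlier on the target survive unless the caller explicitly opts into replacing them.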

David Schäfer authored c6d999f2


David Schäfer authored

Release 2.3 See merge request !605

7a044374

David Schäfer authored a7754359


David Schäfer authored

Release2.3 See merge request !606

53eb2d6f

9c7ca044


David Schäfer authored

Inter limit fix See merge request !600

Changed files:

- .github/workflows/main.yml: 7 additions, 7 deletions

- .gitlab-ci.yml: 0 additions, 17 deletions

- CHANGELOG.md: 20 additions, 2 deletions

- docs/Makefile: 0 additions, 3 deletions

- docs/cookbooks/MultivariateFlagging.rst: 5 additions, 5 deletions

- docs/cookbooks/OutlierDetection.rst: 1 addition, 1 deletion

- docs/requirements.txt: 1 addition, 2 deletions

- docs/resources/data/hydro_config.csv: 3 additions, 3 deletions

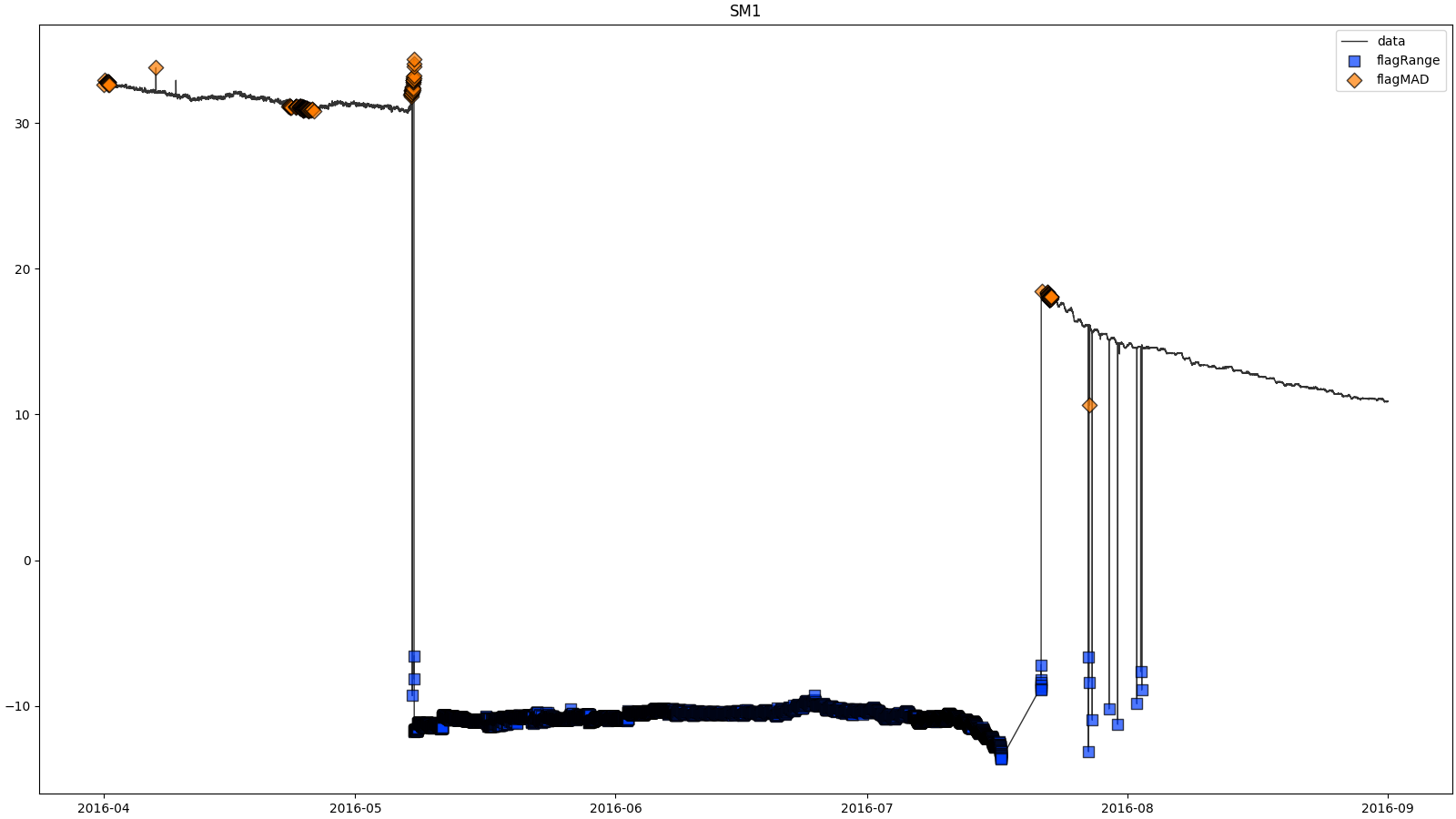

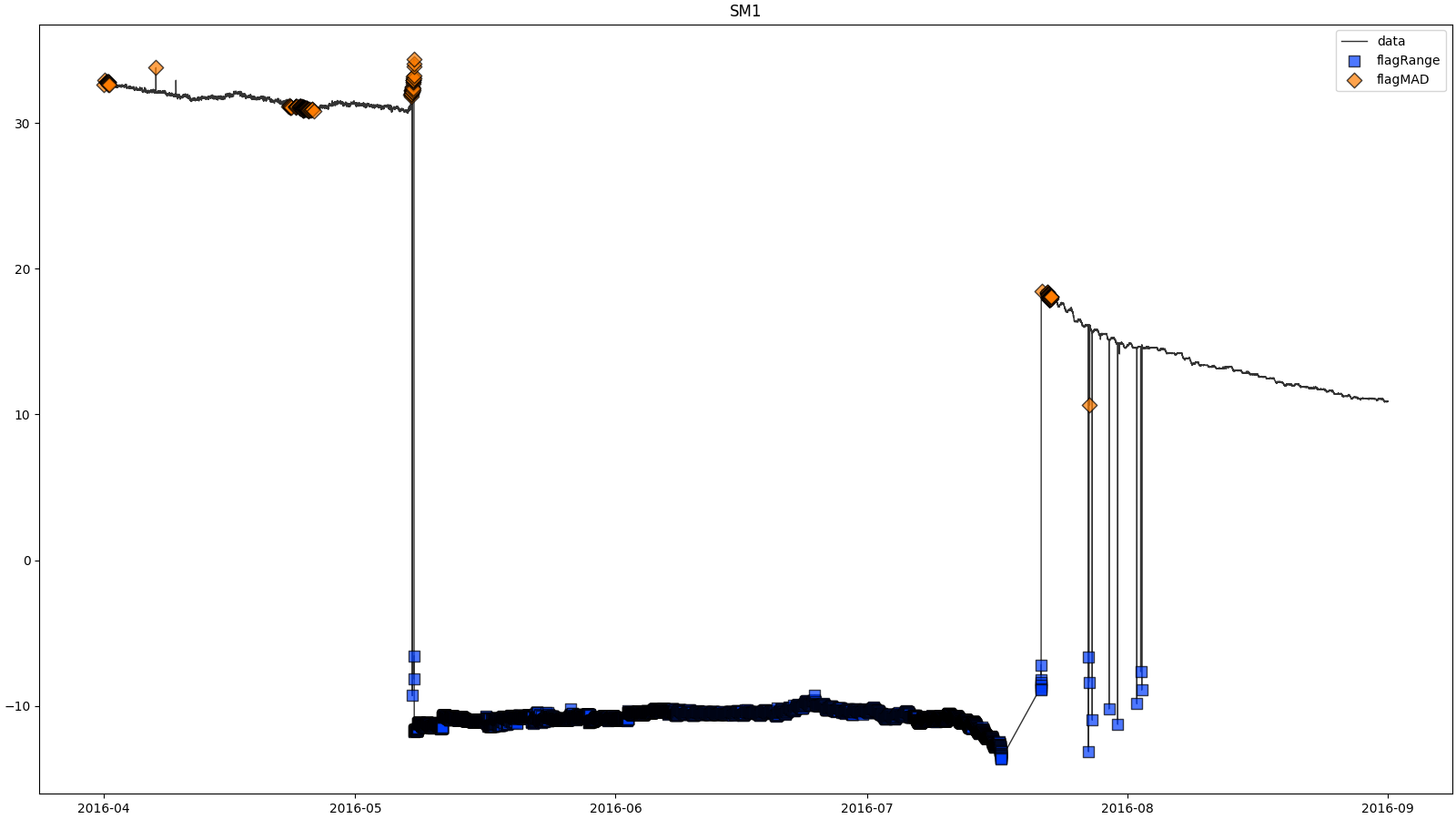

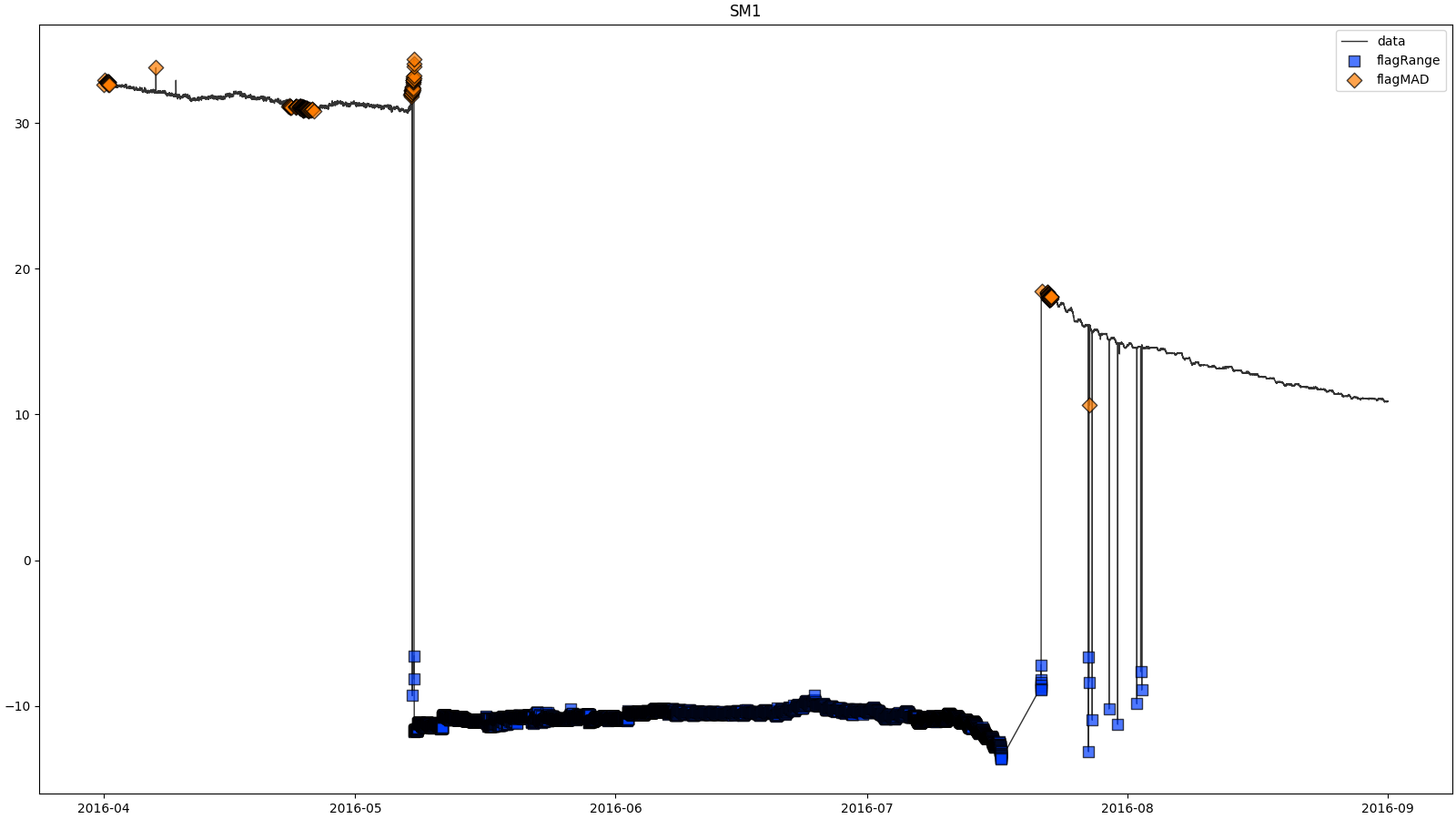

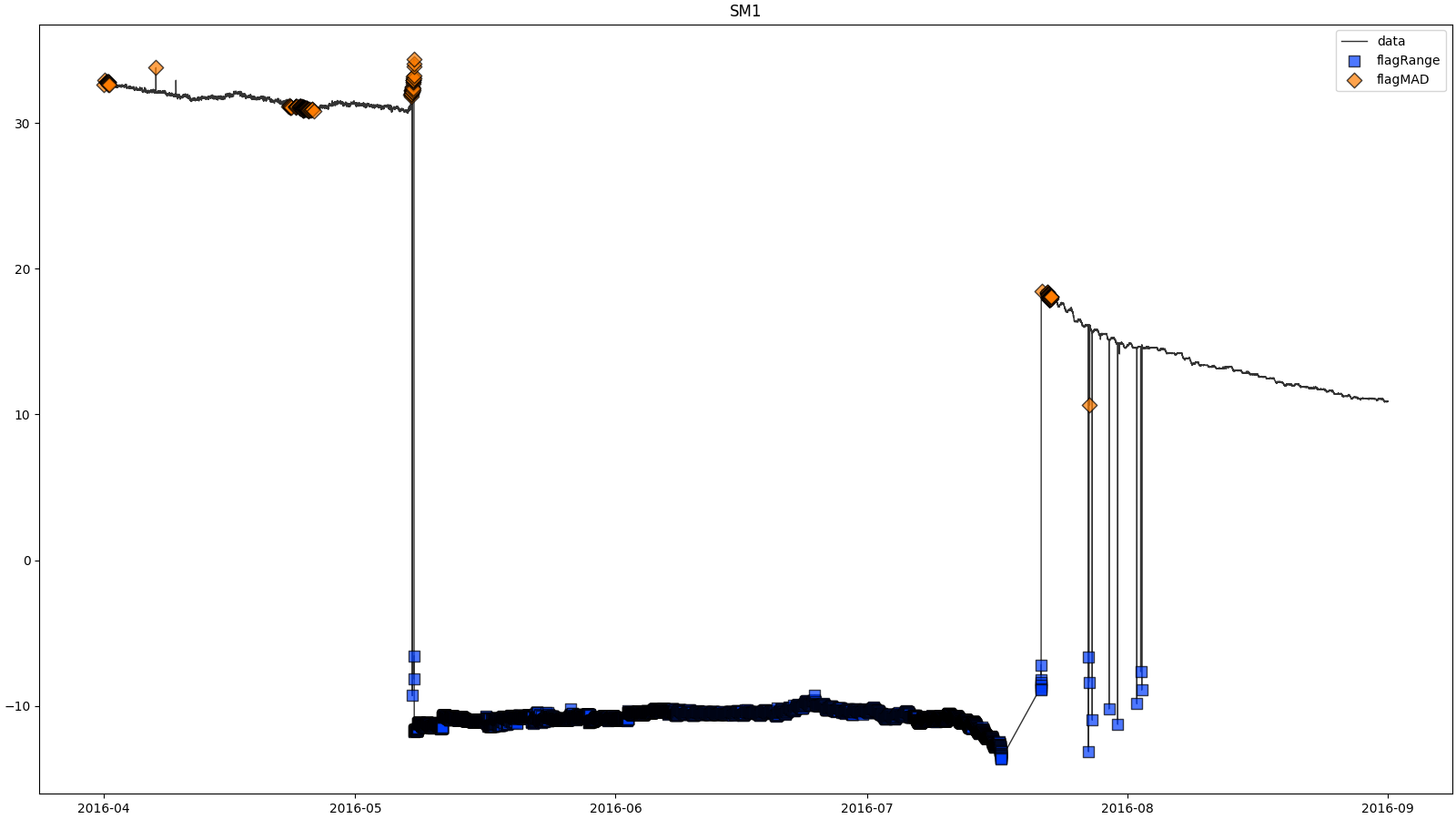

- docs/resources/temp/SM1processingResults.png: 0 additions, 0 deletions

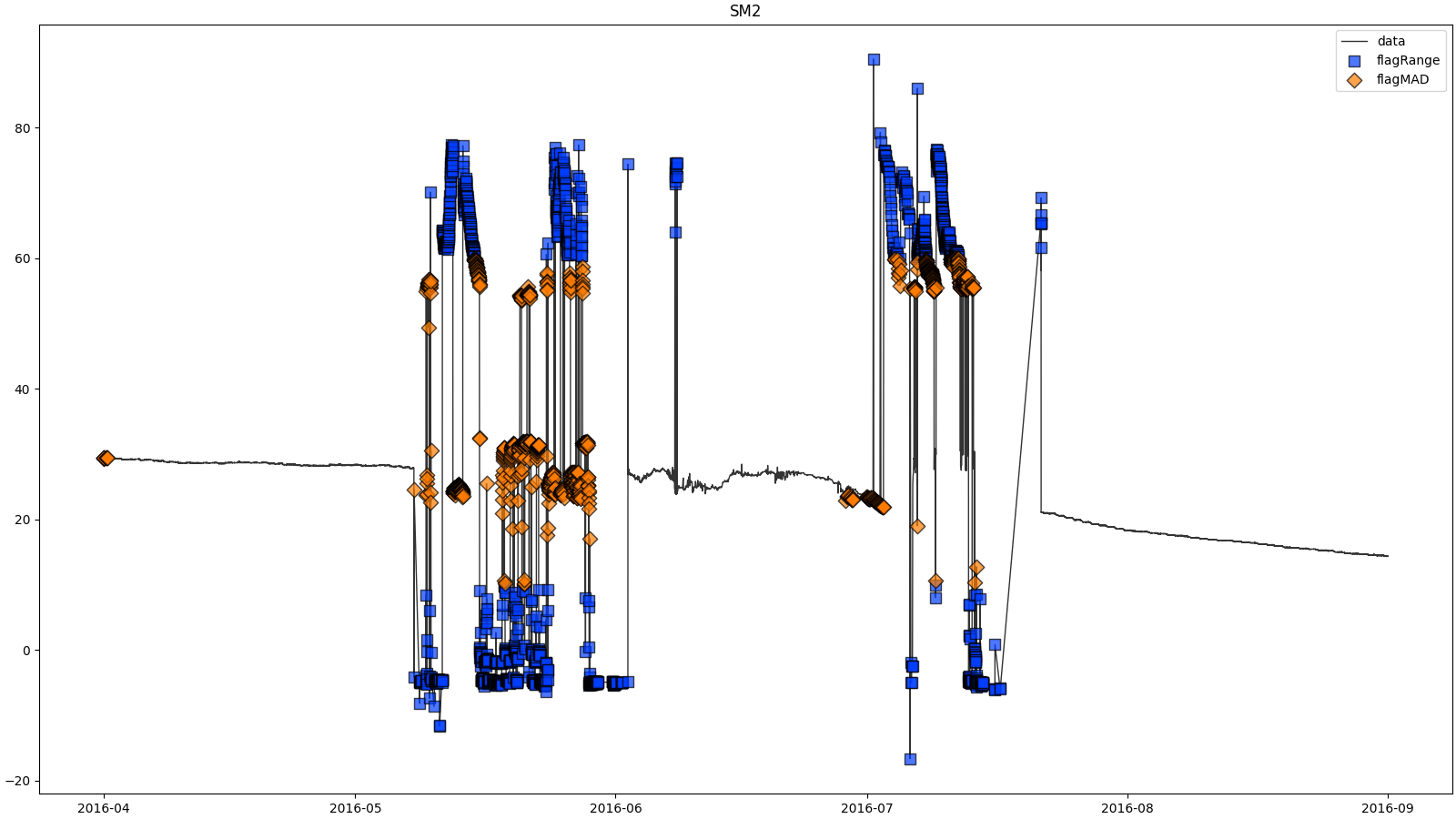

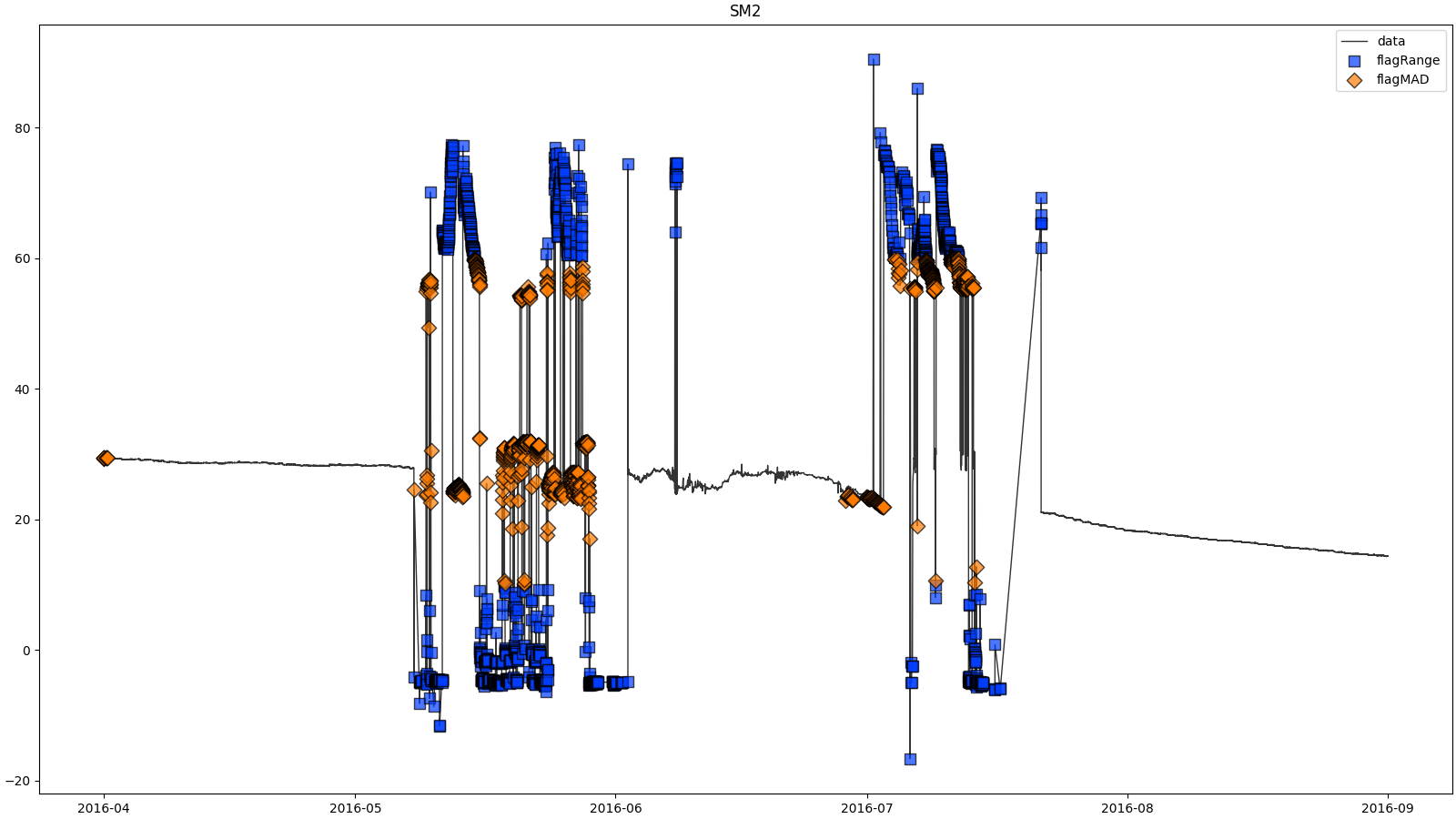

- docs/resources/temp/SM2processingResults.png: 0 additions, 0 deletions

- requirements.txt: 7 additions, 8 deletions

- saqc/core/core.py: 1 addition, 1 deletion

- saqc/core/flags.py: 3 additions, 5 deletions

- saqc/core/history.py: 231 additions, 104 deletions

- saqc/core/register.py: 1 addition, 9 deletions

- saqc/core/translation/__init__.py: 1 addition, 0 deletions

- saqc/core/translation/basescheme.py: 48 additions, 21 deletions

- saqc/core/translation/dmpscheme.py: 5 additions, 5 deletions

- saqc/core/translation/positionalscheme.py: 4 additions, 4 deletions

- saqc/funcs/flagtools.py: 9 additions, 1 deletion


requirements.txt, hunk `@@ -4,13 +4,12 @@`:

| Package | Old version | New version |
| --- | --- | --- |
| Click | 8.1.3 | 8.1.3 |
| dtw | 1.4.0 | 1.4.0 |
| hypothesis | 6.55.0 | (removed) |
| matplotlib | 3.5.3 | 3.6.2 |
| numba | 0.56.3 | 0.56.4 |
| numpy | 1.21.6 | 1.23.5 |
| outlier-utils | 0.0.3 | 0.0.3 |
| pyarrow | 9.0.0 | 10.0.1 |
| pandas | 1.3.5 | 1.3.5 |
| scikit-learn | 1.0.2 | 1.2.0 |
| scipy | 1.7.3 | 1.10.0 |
| typing_extensions | 4.3.0 | 4.4.0 |